An initial promise of the Compute Express Link (CXL) protocol was to put idled, orphaned memory to good use, but as the standard has evolved to its third iteration, recent product offerings have focused on memory expansion.

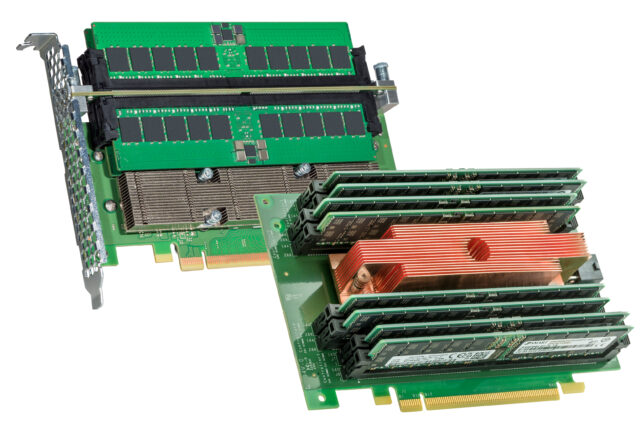

SMART Modular Technologies recently unveiled its new family of CXL-enabled add-in cards (AICs), which support industry-standard DDR5 DIMMs in 4-DIMM and 8-DIMM options. The AICs allow up to 4TB of memory to be added to servers in the data center. The company has spent the last year putting together these products with the aim of making them plug-and-play.

SMART Modular’s AICs are built using CXL controllers to eliminate memory bandwidth bottlenecks and capacity constraints, and are aimed at compute-intensive workloads like AI, machine learning (ML) and high-performance computing (HPC), all of which need more high-speed memory than current servers can accommodate.

The introduction of SMART Modular’s AICs comes at a time when the company is seeing two basic needs emerge, with the near-term one being a “compute memory performance capacity gap.”

The other trend is memory disaggregation. The problem with memory disaggregation has been a lack of standards. CXL helps with that, and networking technology has also improved significantly.

CXL overcomes the need to add more CPUs in a server environment, which is an expensive path to adding performance. The idea with SMART Modular’s AICs is that they can be installed in an off-the-shelf server.

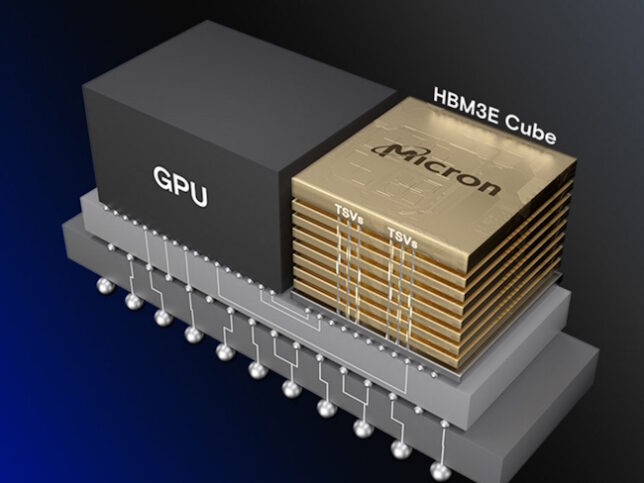

Micron Technology is another early CXL mover, and its CXL CZ120 memory expansion module speaks to the trend toward adding more memory to a server to meet the demands of AI workloads rather than overprovisioning GPUs.

The company first introduced its CXL CZ120 memory expansion modules in August 2023, and now the module has hit a key qualification sample milestone. The CZ120 has undergone substantial hardware testing for reliability, quality, and performance across CPU providers and OEMs, as well as software testing for compatibility and compliance with operating system and hypervisor vendors.

Read the full story on EE Times.

Gary Hilson is a freelance writer with a focus on B2B technology, including information technology, cybersecurity, and semiconductors.